'The cloud' is not just servers. 'Going to the cloud' could also mean locking into a forever sub-contractor

The very brief version: “going to the cloud” can mean renting services/servers that you could get from anywhere. There’s little lock-in. The same four words “going to the cloud” might also mean locking your operations to a specific cloud provider, whose proprietary services will now be part of your business processes “forever”. Be specific about which variant of cloud you are signing off on!

I’m mostly out of the office but this post was already in the pipeline and I thought it might be useful to get it out. Also, as usual, a very warm thank you to the many proofreaders who provided many valuable insights and corrections!

The brief version: Software was previously deployed on servers, then on virtual servers, then on rented virtual servers. We are now massively taking what seems like the next logical step: getting “locked-in to the cloud”, where organizations base their services not just on servers but ever more on powerful, non-standard, proprietary third party cloud services. This, however, is not a simple next step; it is a fundamental change.

From here on, the cloud provider’s unique intellectual property is an integral part of the services and products that operators provide. These cloud services are not standardized or interchangeable. These are not just databases. They are not some kind of electricity grid or water supply where you can just pick new vendors. Used this way, the cloud is not just replaceable hardware. The operator’s services are then deeply intertwined with specific cloud software that operators then have to rent forever. The cloud thus becomes an eternal subcontractor.

The upside of this is that we can use much more specialized developers who don’t need knowledge of things like servers, storage, databases or the finicky internet things required to get users to sign on and log in safely. Also, many of the cloud building blocks may get operators to their destination a lot faster, and perhaps even more safely.

But the downside is that operators don’t “own” their services anymore. The hyperscaler cloud provider is now a mandatory subcontractor, an eternal part of the product, commingled with your own intellectual property. And because of specialization to the cloud, operators are quickly losing the developer skills to migrate to something else, and over time this may become practically impossible.

“Moving to the cloud” can be a wise choice, but only if operators are keenly aware of the vital difference between renting servers and databases (which is pretty safe) and moving beyond that to integrating third party intellectual property, forever, into their solutions.

Next to cloud as a platform on which to deploy software, there is also ‘software as a service’ (SaaS), the grandest example being Microsoft 365, which provides an entire office working environment as a service. When basing operations on SaaS, users have few operational worries left, but also only the tiniest measure of control over the future, or over where data goes.

Clever operators will restrict their usage of hard to replace cloud services. This can be done in two ways: limit the number of dependencies, or make sure each service is also available as software that could be run on other servers/clouds, and make sure the solution also runs on these alternatives. Taking either of these two measures is work now, but will pay off tremendously later.

Added to the above are geopolitical considerations - it is highly inconvenient if mandatory third party services that form an integral part of your software can only be sourced from a degrading democracy across the ocean, traversing trade war barriers.

These cloud choices are very difficult to make, yet we currently see them typically being made on an ad-hoc basis, at relatively low levels in organizations, places where geopolitics and long-term strategies are not often discussed. Conversely, in some other (government) places, the official policy is now ‘Azure, unless’, which also does not offer the fine-grained guidance required.

Senior management should take an avid interest in these tricky things, allowing developers to benefit from the good things that ‘cloud’ has to offer, while sidestepping potential future embarrassment and possible crises.

This post is a followup to:

The longer story

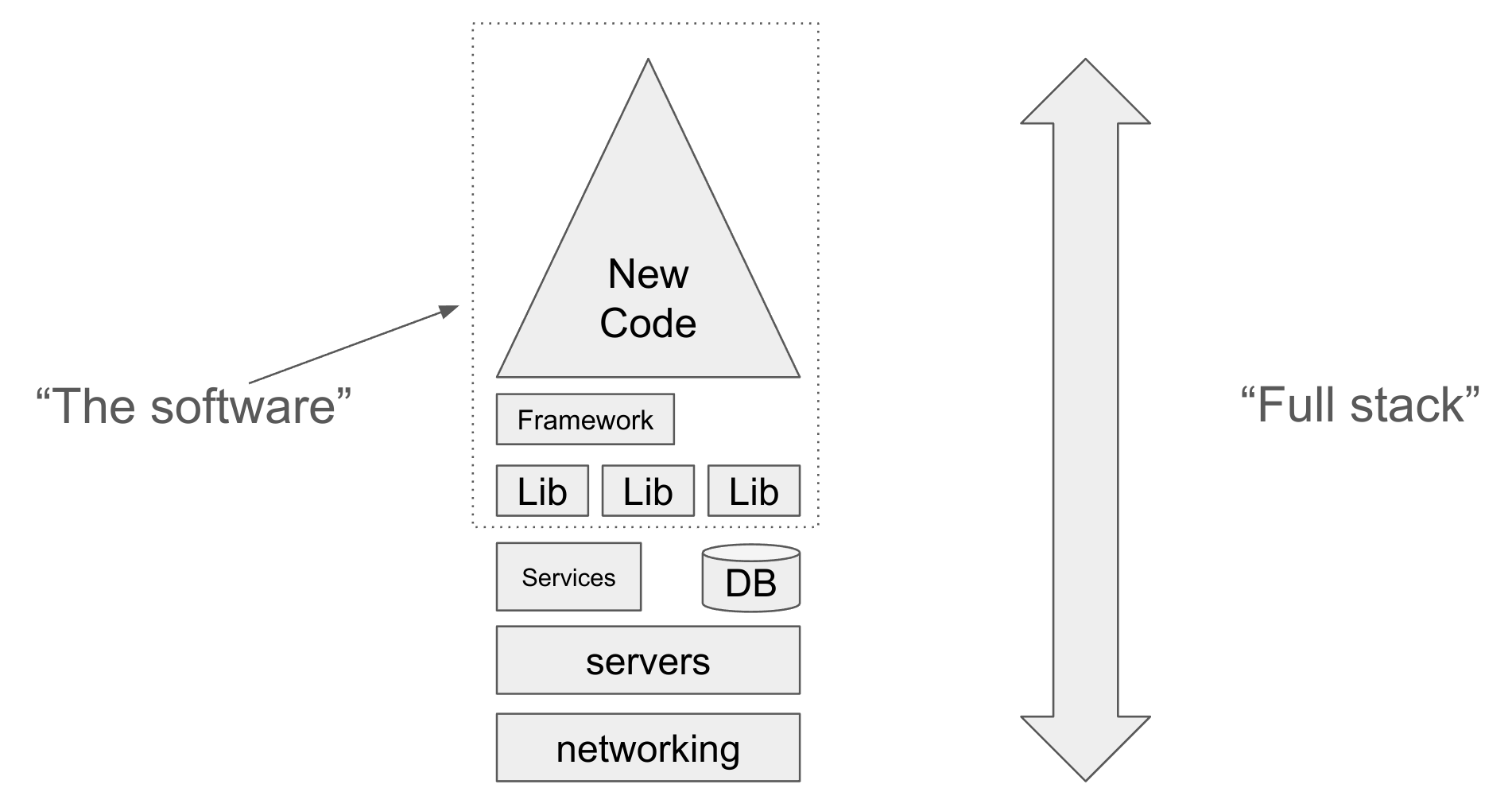

From the 1970s to the 2000s, operating a software based service looked like this:

This stack contains original code (the triangle), which builds on frameworks and libraries that are typically licensed to us eternally. This means we have enormous control over what is going on. The combined software meanwhile runs on databases and services which we also run ourselves.

While companies like IBM have always been around to make this happen, somehow a team had to be assembled that could take care of everything from transistors to lines of code that contain the logic of the service. To this day, it is feasible to work like this, and the very largest providers of services do so. A well known example is the Netflix streaming service, which consists of Netflix-owned hardware that streams stupendous amounts of video using very limited space in a data center. Netflix would simply not be economical if they did not have full control over the stack.

Servers and their data centers are a hassle

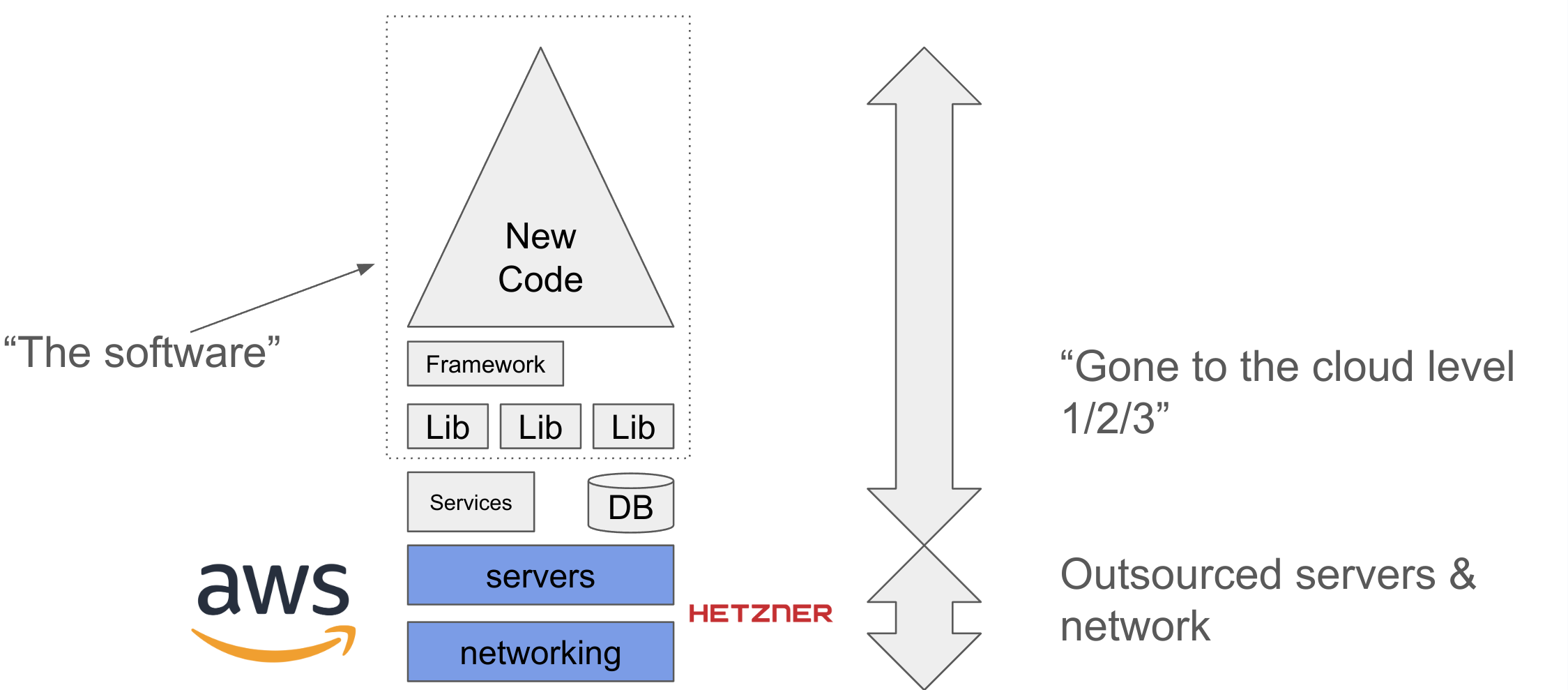

However, taking care of physical servers is a hassle, and the data centers that house them are entire projects themselves. It is entirely reasonable in many cases to get someone else to provide you with rented servers. This already entails a privacy risk however depending on how you deploy things. However, given the right partner & privacy legislation this could be a good idea:

Note that the dotted rectangle called ‘software’ is still entirely ours. Doing it like this has rid us of the need to maintain servers, but has not significantly altered our way of working. One change is that we might be using servers much more dynamically (perhaps via containers), which could lead to significantly higher amounts of compute power being consumed.

The cloud level 1/2/3 from the diagram refers to the terminology from this earlier post.

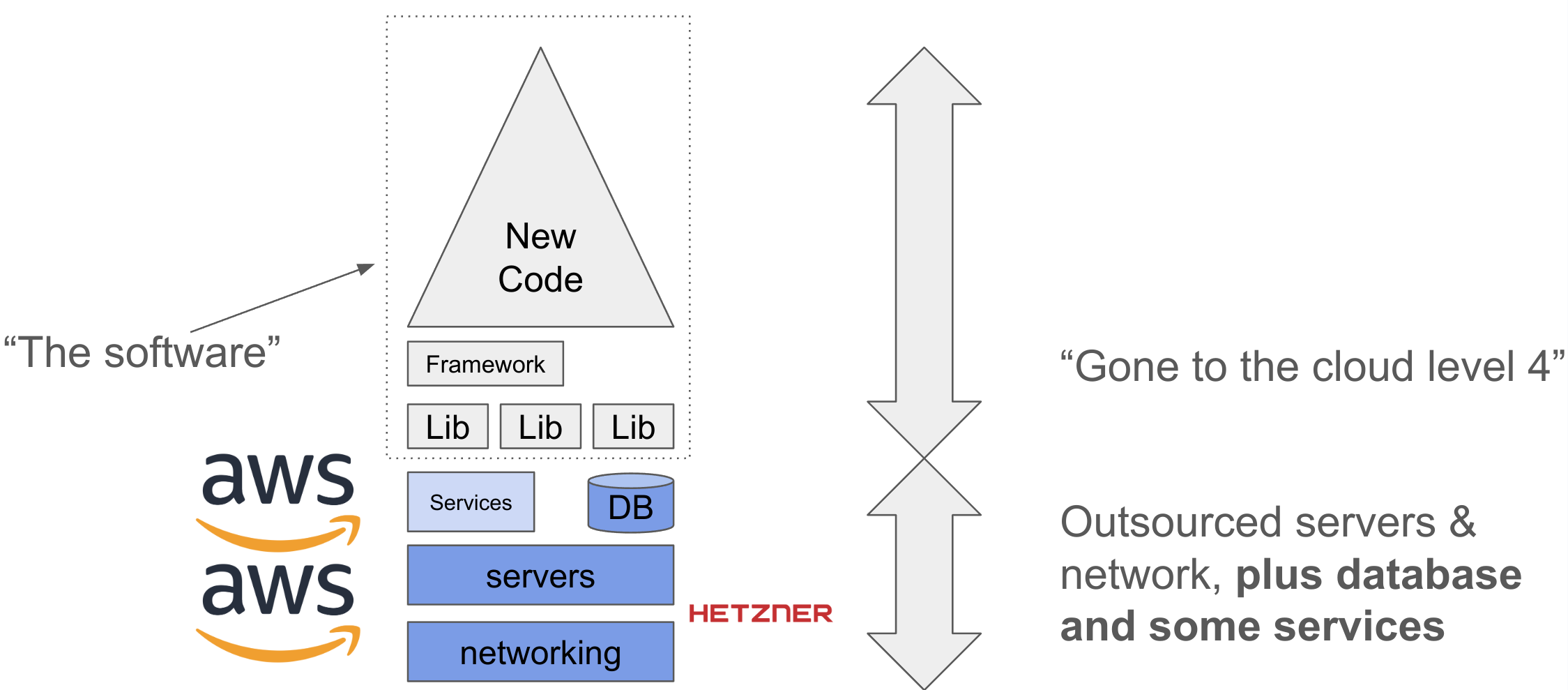

Using some cloud software services

Next up, we could decide to let a cloud provider offer us some commodity services. Object storage, database, web hosting, key/value stores. This frees up the need to do maintenance on these things ourselves, and gives us someone to blame instead:

Key to this level is that the ‘software’ component is still fully ours. We could move somewhere else, we only need to find another provider for the relatively basic “fungible” services we are consuming.

Meanwhile, this mode allows developers with no skills at setting up databases to still be productive.

It is of course not that easy to figure out which services are commodity enough. And with a few sub-optimal choices, we head straight to the next and remarkably different level of cloud.

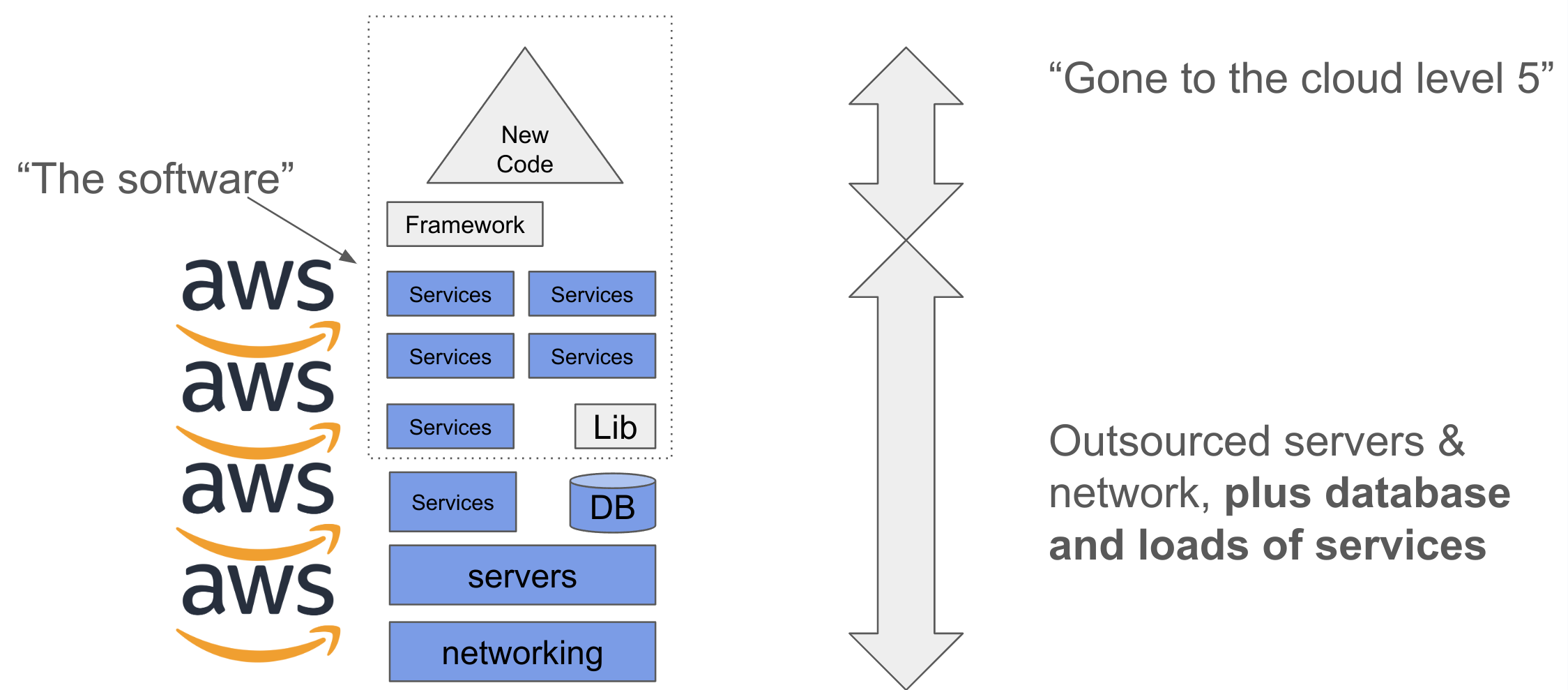

The cloud as software provider

Here we shift to an entirely new world. It is in a way terrible that all of this is called ’the cloud’. In what follows, the cloud becomes our software provider, paid per use:

Note that the cloud services have now entered the software rectangle. Our developers are making use of significant components which provide essential services. These services are not commodity (like database or computing power). These are services that may have equivalents elsewhere, but they are not wired in the same way. This is not plug and play. And to some extent, some of these third party services may well end up forming the backbone of our product.

The cloud provider is now not just your subcontractor, you are effectively in business together. Except that the cloud provider can set the rates and conditions under which this partnership proceeds. And because modern developers are often wedded to a specific cloud provider’s technologies, they may have a hard time helping you migrate to something else.

It should be clear that this is an undesirable state, except for easily replaceable non-core activities.

In places like the pharmaceutical and broader chemical industry, companies have long learned not to depend on a single supplier. There they spend significant effort to root out dependencies on single vendors or even single shipping lanes. The IT industry in the past also worried about these things, leading to such odd ducks as the AMD-made Intel 80286 chip. Operators demanded that an alternate supplier of their key technology existed, and they got it.

These days under the guise of ‘cloud as a commodity’ we are gluing ourselves to specific suppliers, without realizing that they are not in fact supplying commodity services.

Locked-in to the cloud? Is there a better name?

Many experts recognize there is a vast difference between relying on ‘fungible’ cloud services you could get anywhere (servers, storage, database), and going further in and tying yourself to a specific cloud, while benefiting from more advanced services.

Yet there appears to be no solid separate name for this second kind of cloud use. I’ve previously used ‘fully cloud native’ but that term has been co-opted to mean almost anything. Other people like ‘platform as a service’ (PaaS), but if you look at the definitions used by AWS, Microsoft and Google, that term is also not so useful.

If there were a good name, this might actually help people make better choices - are we renting servers, or are we entering the locked-in cloud?

Intermezzo - with the right skills, you can get into and out of a cloud again

It is indeed the case that if you have lots of clever architects and more or less “full stack programmers”, you can prevent this problem (total dependency) from happening in the first place, or rapidly migrate away should a cloud partner become problematic.

However, in many cases companies go “full in/locked-in” on a cloud partner precisely because they no longer have such clever staff, or are bleeding such people at a rapid clip. So yes, if you have the right people, this is less of a risk - but you need to be sure that these right people will stick around, which is doubtful if you’ve decided to outsource most of your technical needs.

Corey Quinn already wrote about this problem in 2020, in “The Vendor Lock-In You Don’t See”. It is a very worthwhile piece.

In general, it is very hard and traumatic work to move a working solution from one platform to another. And this holds very much for cloud providers, who don’t just provide electricity or computing power, but large amounts of tricky and very specific services. Untangling these requires lots of skilled people who know about more than one cloud, or who can even work without one. But those folks are typically long gone when problems show up.

Experience shows that companies have a tremendously hard time ‘reskilling’ when the time comes. Boards and middle management have by that time lost their technical management chops (or even interest). HR doesn’t know how to recognize good technical people anymore. And the working conditions are also no longer attractive for prime geek material.

As a concrete example, one place I know has forgotten that developers need actual computers on which they can develop. So they are now outfitting developers with locked down desktops that run Excel and Word. But they can’t run an IDE or a compiler. The organization thinks you can get people to do software development on computers that don’t have the software to do so.

‘Repatriating’ your workloads back onto infrastructure you control is going to be very hard work.

As an aside, some people claim that operators should strive for multi-cloud operations, which is one way of not getting locked in to a specific cloud provider. Most organizations that go to a specific cloud provider however do so precisely because they lack the skills to do such complicated multi-cloud operations. It is easier to restrict cloud dependencies than to try to hedge against them with multi-cloud architectures.

Striking the balance

Governance should be applied to which cloud services developers can use for production services. Such choices are currently often made ad-hoc and in the wrong rooms. The future harm arising from poor cloud choices is typically not a large factor for cloud architects, who are tasked with delivering services now. We must not expect computer programmers to stay abreast of geopolitical considerations far removed from getting their work done.

Despite this, it is currently nearly unheard of to apply such governance from the top of an organization to development departments. There is also little operational precedent for such things working well. Instead, we sometimes see no policy at all or a blanket ‘use AWS, unless there is a specific reason not to’.

Key things to look out for when consuming specific cloud services:

- Is the service also available as software, so we could run it ourselves if need be, or could someone else run it for us?

- Can we architect our thing such that we have the ability to change to equivalent services, even though the APIs and perhaps semantics are different?

- Does the service work itself deeply into how our solution works? For example, does the cloud provider now provide the identity for all our customers? Are our customers (and their accounts) really ours anymore?

- Could we get our data out again seamlessly? Or do we have to get all our users to change passwords or perform other work to complete the migration?

- Do we really need this service? Perhaps our database also has this functionality?
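The second question in the list above, about being able to change to equivalent services, can be made concrete with a small sketch. What follows is only an illustration under assumptions: `BlobStore`, the two backends, `save_invoice` and `migrate` are all hypothetical names, and the two backends merely stand in for two different providers. The idea is that our own code only ever talks to a narrow interface that we define ourselves, so that a provider-specific API never leaks into the rest of the solution.

```python
from __future__ import annotations

import tempfile
from pathlib import Path
from typing import Protocol


class BlobStore(Protocol):
    """Hypothetical narrow interface: the only storage operations our code may use."""

    def put(self, key: str, data: bytes) -> None: ...

    def get(self, key: str) -> bytes: ...


class InMemoryBlobStore:
    """Stand-in for 'provider A'. A real adapter would wrap that provider's SDK."""

    def __init__(self) -> None:
        self._blobs: dict[str, bytes] = {}

    def put(self, key: str, data: bytes) -> None:
        self._blobs[key] = data

    def get(self, key: str) -> bytes:
        return self._blobs[key]


class LocalDirBlobStore:
    """Stand-in for 'provider B': same interface, entirely different backend."""

    def __init__(self, root: Path) -> None:
        self._root = root
        root.mkdir(parents=True, exist_ok=True)

    def _path(self, key: str) -> Path:
        # Flatten the key so it maps onto a single file name.
        return self._root / key.replace("/", "__")

    def put(self, key: str, data: bytes) -> None:
        self._path(key).write_bytes(data)

    def get(self, key: str) -> bytes:
        return self._path(key).read_bytes()


def save_invoice(store: BlobStore, invoice_id: str, pdf: bytes) -> str:
    """Business logic depends on the interface, never on a specific provider."""
    key = f"invoices/{invoice_id}.pdf"
    store.put(key, pdf)
    return key


def migrate(source: BlobStore, target: BlobStore, keys: list[str]) -> int:
    """Copy the given keys from one backend to another; returns the number copied."""
    for key in keys:
        target.put(key, source.get(key))
    return len(keys)


if __name__ == "__main__":
    old = InMemoryBlobStore()
    key = save_invoice(old, "2024-001", b"%PDF-demo")
    new = LocalDirBlobStore(Path(tempfile.mkdtemp()))
    migrate(old, new, [key])
    print(new.get(key))
```

This only works for services that are close to commodity. An interface this narrow hides differences in APIs, but not differences in semantics such as consistency or access control, which is exactly why the questions above have to be asked per service.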

If it turns out to be too hard to apply this fine-grained governance, another possibility is to decide not to use any cloud services beyond computing power / containers and databases. Developers must then find software solutions for their needs, and get these deployed on cloud servers. This is more work, but it might eventually also be a lot cheaper.
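A blunt rule like this has the advantage that it can be checked mechanically. As a rough sketch, and assuming projects are willing to declare their cloud dependencies in some form, a check could look like the following. The category names, the `CloudDependency` type and the example services are invented for illustration and do not refer to any real tool or product.

```python
from dataclasses import dataclass

# Assumption: the allowlist mirrors the rule in the text above, i.e. only
# computing power, containers and databases may be consumed from the cloud.
ALLOWED_CATEGORIES = frozenset({"compute", "containers", "database"})


@dataclass(frozen=True)
class CloudDependency:
    """One cloud service a project says it depends on (hypothetical format)."""

    name: str
    category: str


def outside_policy(
    deps: list[CloudDependency],
    allowed: frozenset[str] = ALLOWED_CATEGORIES,
) -> list[str]:
    """Return the names of declared dependencies that fall outside the allowlist."""
    return [dep.name for dep in deps if dep.category not in allowed]


if __name__ == "__main__":
    declared = [
        CloudDependency("vm-fleet", "compute"),
        CloudDependency("postgres", "database"),
        CloudDependency("managed-identity", "identity"),
        CloudDependency("proprietary-queue", "messaging"),
    ]
    for name in outside_policy(declared):
        print(f"needs a software alternative or explicit sign-off: {name}")
```

The check itself is trivial. The hard part remains organizational: someone with the right mandate has to own the allowlist and decide what happens when a project falls outside it.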

Either work on improved hands-on governance, or get developers to do more work. But in both cases the benefits can be massive.

Because the world is not getting any simpler

Servers can be rented anywhere. Giving a supplier access to your proprietary, secret, perhaps healthcare related data is always a scary decision. Until recently, handing over your deepest secrets to Microsoft, Google or Amazon was considered a reasonable strategy, especially when sufficient money was spent on impact assessments. These assessments should, however, have taken into account that the providers might end up coming from a country that has decided that your continent, or the European Union, is “very nasty”.

Picking a supplier you can’t get away from in short order, from a country that is turning remarkably unstable, may not be the greatest idea.

Summarizing

“Going to the cloud” can mean many things. This also effectively means that “going to the cloud” is not a complete decision. If it means “we’re going to rent servers dynamically”, it is a rather innocuous thing. However, these same four words could mean picking Google as an eternal subcontractor that now controls access to our customers, forever. In this case, “going to the cloud” represents handing over your future.

It is highly confusing that both these activities go by the same name. Cloud providers are also not highly motivated to point out which of their services are (terminally) sticky. And in fact, given their pricing models and free tiers, it appears almost as if hyperscaler cloud offerings are designed to lock users in as tightly as possible.

With the right attention and choices, good use can be made of the cloud. However, since most of the potential problems are in the future, companies might have a hard time applying sufficient quality governance, since this costs real money right now.

When in doubt, an easy way out is to only use cloud services that are actually widely available, nearly identically in many places.

But in any case make sure a decision that sounds like “we’re going to rent easily replaceable servers” does not end up as “actually we picked Google as our eternal partner, and they own the customer credentials now”.