AI: Guaranteed to disrupt our economies

This is a machine-aided translation of this Dutch post. And now also available in German!

Everyone is falling over themselves to make predictions about AI. It’s going to free us from menial work, it’s going to dismantle our education, none of us will have to learn anything anymore because the AI will do it for us, criminals will trick us with it, crooks will create endless amounts of disinformation with language models, and the AI will escape and become dangerous in the real world. Who knows.

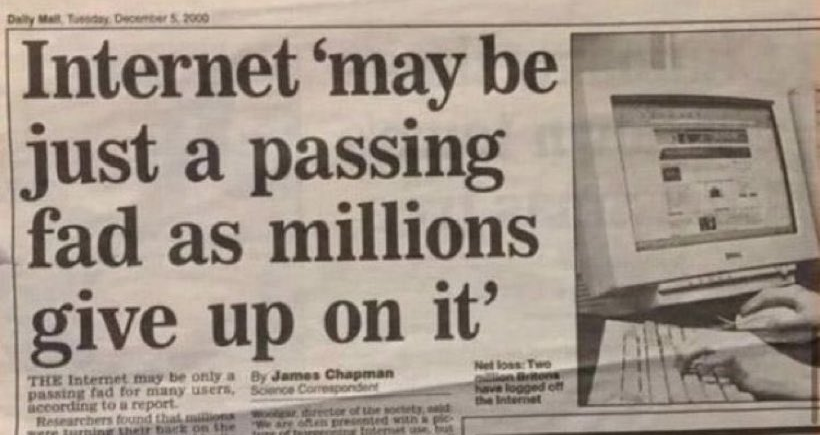

Predictions are tricky, especially about the future. I myself have proclaimed that anyone making ChatGPT forecasts is now making a total fool of themselves.

5 December 2000. Any day now.

This is because people are notoriously bad at seeing the future, even if all the facts are on the table (and they usually aren’t).

Today, it is my turn to make a fool of myself with a prediction about AI.

I will limit myself to some very simple scenarios, where neither humans nor AI need to have malicious plans, and yet things go horribly wrong.

Really intelligent?

There is much debate about whether ChatGPT & competitors are ‘truly intelligent’, and also about whether they still make too many mistakes. Some people even claim that AI has become sentient (and then get fired by Google). These are fascinating discussions.

It seems that our experience with calculators, which can calculate better than us in every way, has raised the expectation level for AI quite a bit. If ChatGPT is not your personal, perfect pocket Plato, it apparently does not amount to anything yet.

The debate over whether AI is truly intelligent, whatever that means, is great fodder for philosophers. Back in the 1980s, this was treated comprehensively in the Chinese Room Argument: is a room that can answer questions in Chinese using mountains of paper instructions “intelligent”? Anyone who wants to opine on AI would do well to re-read that discussion, so that whatever opinion you share with us is at least a fresh one.

AI & the economy

As a kind of coping mechanism, there’s a lot of grumbling that ChatGPT specifically is no good:

- It is not really intelligent

- What comes out is not always correct, you have to check to make sure

Well, I have some amazing news. Companies don’t give a hoot about this. Maybe AI is not intelligent, but at least it does not whine about working hours, raises, or drafty offices.

Is AI good enough to deploy yet? Since when has that ever stopped companies from trying anything? And that is the crux: can an AI application reasonably assess CVs by the thousand? When people do it by hand, they suck at it too, so AI might already be better.

Insurers spotting problematic customers? That should already work reasonably well. Helping customers with their product queries? My guess is that ChatGPT (which actually did read your manuals) already does a better job than your as-cheap-as-possible helpdesk. And medical advice for when your doctor doesn’t have time for you in the next few weeks? I have no doubt it is going to happen.

Is that good? No – companies will presumably move too fast. After all, companies have no legal duty to think about the social effects of what they do. If something is not prohibited, it is allowed, and if it saves money, it will be done.

By the way, for those who think “nobody will leave important decisions to mediocre AI anyway”, the municipality of Rotterdam has already done so. And that is a local government, which is supposed to act in our interest.

What can happen in the short term

The biggest advances in AI are now being made in China and America. Europe plays a very modest role (although many of the inventors are European, they now live in the US).

It is obvious that our companies will be plied with AI solutions that will make helpdesks obsolete. There will be services to scan customer records for at-risk customers, or just customers who need more attention.

Apart from huge rounds of layoffs among specific professions, this will also lead to a yet bigger flow of our private data to “the Cloud in America”.

And after that?

Computers will become “good enough” at more and more jobs. This is a very unpleasant prospect, and to give ourselves some hope, many people fall back on the argument that “AI systems are wrong sometimes”.

But have you ever worked with people? They suffer from that problem a lot too.

Meanwhile, AI technology will definitely not get any worse in the coming years. The pace of innovation still seems to be increasing – we are far from hitting a “wall”, as happened during the AI winter in the 1990s.

In time, this will almost certainly lead to major economic disruption – how will we cope when computers can take over so much of our work at such a rapid pace?

But it’s always gone well up until now, hasn’t it?

The industrial revolution is often cited as a success story, incidentally only by people who did not personally live through it. The outcome brought us a great deal, but during the revolution itself, conditions for the new workers were horrendous. It took generations before there was progress for the majority of the population.

So when anyone says it has always worked out in the end, also take a look at how long it took and how things went in the meantime.

I see a lot of people who are 100% certain that it ‘always works out’, but I’m not so sure. It is not guaranteed that we can all become ‘prompt engineers’ and Instagram coaches.

What should we do then?

Very good question. For a start, it would help to try to predict which sectors of the economy are likely to be disrupted first. Goldman Sachs, a bank, has already done a study of this, for example.

We could also consider drafting policies on collective dismissals. In most European countries you need to go through a procedure to lay off whole departments. Perhaps “replaced by ChatGPT” should not be an admissible ground for such a layoff, at least for a while.

Further, if new jobs are created, it would be good if those jobs were actually on this continent. However, you can safely predict that the AI services we are going to use to fire entire departments will be sourced from the US. In fact, in Europe, we immediately started tightly regulating AI, almost guaranteeing that the innovation won’t come from here.

I’m a huge fan of the EU (check the hat), but I also know how this kind of regulation tends to work out: we enforce it for our own organisations, but let US companies have their way. For example, see this nice explanation of the GDPR:

The Spice Girls (a band from the 1990s) explain the GDPR

We are not innovating that quickly in Europe anyway, and things are not looking very good for AI here either.

It would therefore make sense to be pragmatic about regulating AI to at least ensure that there is still a chance of it generating employment here (since we’re not going to outlaw the use of AI).

And what if it does get out of hand?

So far, I have assumed an AI without malicious intent, and looked at the economic effects if companies simply do the expected things. These are relatively straightforward predictions.

But it is also quite possible that things really do go wrong. I have now played with Large Language Models long enough to know that we don’t know what these things are doing internally. Even though it’s just an algorithm, it does actually get angry at you, for example. A philosopher might tell me that numbers cannot be angry, but if you let those same numbers make real decisions, those decisions are suddenly “angry” for real.

This has recently been well described as the “Safe Uncertainty Fallacy”:

- The situation is completely unpredictable, and we have no idea how it will turn out

- So it will be ok

It doesn’t work that way, of course. There is cause for concern.

But ChatGPT is not intelligent, and it is not always correct!

Yes, I understand – but it doesn’t need to be for the above scenarios. We already have a hugely disruptive situation if AI is only “good enough”, or at least much cheaper, for some applications. And we will have to deal with that anyway.

And I hope we won’t stick our heads in the sand over how AI will disrupt our economies.